T5: Adversarial Machine Learning

Tuesday, 5 December 2017

13:30 - 17:00

Salon II

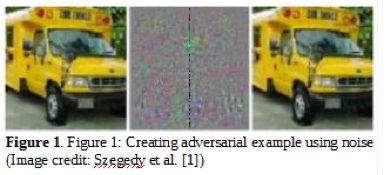

Machine learning based systems are increasingly used for sensitive tasks such as security surveillance, guiding autonomous vehicles, making investment decisions, and detecting and blocking network intrusions and malware. However, recent research has shown that machine learning models are vulnerable to attacks by adversaries at all phases of machine learning (e.g., training data collection, training, operation). Machine learning systems used for classification tasks (i.e., systems that assign each input to one of several possible classes) can be misled by carefully crafted samples that look normal to humans but that an otherwise accurate system classifies wrongly. Recent research has shown that all model classes of machine learning systems are vulnerable to such attacks. Maliciously created input samples can affect the learning process of an ML system by slowing learning, by degrading the performance of the learned model, or by causing the system to make errors only in scenarios chosen by the attacker.

For example, researchers have demonstrated [1] how to fool an image classification system by making tiny changes to the input images. Figure 1 shows three images. On the left is an image that the system correctly classifies as a school bus. The image in the center is noise which, when added to the left image, creates the image shown on the right, which still looks like a school bus to a human observer. But the system now classifies this image (right) as an ostrich. Attackers can use these techniques to evade a system and make it accept malicious content as genuine.
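To make the idea of a small, targeted perturbation concrete, here is a minimal sketch on a toy logistic-regression classifier. It uses the fast gradient sign method described by Goodfellow et al. (listed in the readings below), not the optimization-based procedure of [1], and the weights, input, and step size `eps` are hypothetical values chosen only for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, eps):
    """Fast gradient sign step: x_adv = x + eps * sign(d loss / d x).

    For the logistic (cross-entropy) loss, d loss / d x = (p - y) * w.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]

# Hypothetical 100-dimensional model and an input it classifies as class 1.
n = 100
w = [0.5 if i % 2 == 0 else -0.5 for i in range(n)]
b = 0.0
x = [0.1 if i % 2 == 0 else -0.1 for i in range(n)]
y = 1  # true label of x

# Each coordinate moves by at most eps, yet the prediction flips.
x_adv = fgsm(w, b, x, y, eps=0.15)

print("clean prediction      :", round(predict_proba(w, b, x), 3))
print("adversarial prediction:", round(predict_proba(w, b, x_adv), 3))
```

The per-coordinate change is bounded by `eps`, but because every coordinate is pushed in the direction that increases the loss, the small changes add up across dimensions and move the input across the decision boundary.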

In addition to misleading machine learning systems, hackers may also be interested in ‘stealing’ the model of a machine learning system, which may be a trade secret. Tramèr et al. demonstrated at the USENIX Security Symposium 2016 [2] that models can be extracted from popular online machine learning services such as BigML and Amazon Machine Learning with a relatively small number of API calls. Many machine learning systems depend on user-provided inputs for continuous training. For public online systems, a user can be anyone in the world. This opens a machine learning system to malicious inputs created by hackers to ‘poison’ the system. Microsoft’s Twitter chatbot Tay started posting racist and sexist tweets less than 24 hours after it was opened to the public for learning [3].
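As a rough illustration of why a small number of API calls can be enough, the sketch below extracts a toy logistic-regression model by equation solving, one of the attack styles discussed in [2]. It assumes the prediction API returns full-precision confidence scores; `make_prediction_api`, the secret weights, and the query points are hypothetical and do not model any real service.

```python
import math

def make_prediction_api(w, b):
    """Hypothetical black-box service: returns only a confidence score."""
    def api(x):
        z = sum(wi * xi for wi, xi in zip(w, x)) + b
        return 1.0 / (1.0 + math.exp(-z))
    return api

def logit(p):
    """Inverse of the sigmoid: recovers w.x + b from a confidence score."""
    return math.log(p / (1.0 - p))

def extract_linear_model(api, dim):
    """Recover (w, b) with dim + 1 queries.

    Querying the origin reveals b; querying the i-th unit vector
    reveals w[i] + b.
    """
    b_hat = logit(api([0.0] * dim))
    w_hat = []
    for i in range(dim):
        e = [0.0] * dim
        e[i] = 1.0
        w_hat.append(logit(api(e)) - b_hat)
    return w_hat, b_hat

# The attacker never sees these values directly.
secret_w, secret_b = [1.5, -2.0, 0.25], -0.5
api = make_prediction_api(secret_w, secret_b)

w_hat, b_hat = extract_linear_model(api, dim=3)
print("recovered weights:", [round(v, 6) for v in w_hat])
print("recovered bias   :", round(b_hat, 6))
```

For a model with `dim` features this needs only `dim + 1` queries. Rounding or withholding confidence scores makes this particular approach harder, though it does not by itself rule out other extraction strategies.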

With machine learning based systems becoming more popular in applications such as computer vision, speech recognition, natural language understanding, recommender systems, information retrieval, computer gaming, medical diagnosis, and market analysis, it is important to understand the security risks these systems face and the techniques that make them robust against various attacks. In this course, we will cover some known weaknesses of traditional machine learning systems, attack categories, possible attacks, and techniques to build robust machine learning systems. We will cover different categories of attacks on machine learning systems (depending on the objective of the attacker), such as exploratory attacks (to ‘steal’ the backend model), evasion attacks (to evade the classification system by misleading it using carefully altered inputs), and poisoning attacks (to change the model itself by providing inputs that can quickly ‘poison’ the system). Since this is an emerging area, we will use recently published research in this area as study material for this course.
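The poisoning category can be sketched with a deliberately simple online learner. The example below is an assumption-laden toy, not a method from the course material: `CentroidClassifier` is a hypothetical one-dimensional nearest-centroid classifier that keeps updating from user-submitted, user-labelled samples, and all numeric values are made up.

```python
BENIGN, MALICIOUS = 0, 1

class CentroidClassifier:
    """Toy online classifier: predicts the class whose running mean is nearest."""

    def __init__(self):
        self.sums = {BENIGN: 0.0, MALICIOUS: 0.0}
        self.counts = {BENIGN: 0, MALICIOUS: 0}

    def update(self, x, label):
        # Continuous training on whatever samples users submit.
        self.sums[label] += x
        self.counts[label] += 1

    def predict(self, x):
        m0 = self.sums[BENIGN] / self.counts[BENIGN]
        m1 = self.sums[MALICIOUS] / self.counts[MALICIOUS]
        return BENIGN if abs(x - m0) <= abs(x - m1) else MALICIOUS

clf = CentroidClassifier()
for v in (1.0, 2.0, 3.0):
    clf.update(v, BENIGN)
for v in (8.0, 9.0, 10.0):
    clf.update(v, MALICIOUS)

target = 7.0                   # sample the attacker wants accepted
before = clf.predict(target)   # flagged as malicious on clean data

# Poisoning: the attacker floods the system with mislabelled samples,
# dragging the benign centroid toward the malicious region.
for _ in range(20):
    clf.update(9.0, BENIGN)

after = clf.predict(target)    # now accepted as benign
print("before poisoning:", before, "after poisoning:", after)
```

The attacker never touches the model directly; the shift comes entirely from the training inputs, which is what distinguishes poisoning from evasion.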

Prerequisites:

- Basic Machine Learning. Understanding of basic machine learning concepts such as Linear Regression, Introductory Neural Networks, Support Vector Machines, Anomaly Detection and Introductory Deep Learning.

Outline:

- Introduction: Security in Machine Learning and Classification systems (1 hour)

    - Recap of basic machine learning models, particularly Neural Networks and Support Vector Machines, Training and Test data. (20 min)

    - Early work on the security of classification systems in the areas of email spam filters, virus/malware detection and network intrusion detection. (20 min)

    - Examples of misleading modern machine learning systems with specially crafted adversarial inputs. (20 min)

- Weaknesses of machine learning systems and attacks (1 hour)

    - Intriguing properties of neural networks and support vector machines that make them vulnerable to attacks. (20 min)

    - Model Extraction using online APIs (10 min)

    - Generating Adversarial Examples for evasion (10 min)

    - Query Strategies for Evasion (10 min)

    - Attacking Text Classification Systems (10 min)

- Making Machine Learning Systems Robust (1 hour)

    - Generative adversarial networks (GAN) and adversarial training (15 min)

    - Defensive Distillation for defense training (20 min)

    - Evaluating Security of machine learning systems against various attack categories (15 min)

    - Conclusion and discussion on some emerging research directions. (10 min)
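As background for the defensive distillation item in the outline: the technique described by Papernot et al. (listed in the readings below) trains a network with a softmax at an elevated temperature and uses the resulting soft class probabilities as labels for a second network. The sketch below shows only the temperature-scaled softmax itself; the logits and the temperature value are hypothetical.

```python
import math

def softmax(logits, temperature=1.0):
    """Softmax over logits / temperature; higher temperature gives softer outputs."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [8.0, 2.0, 0.5]  # hypothetical network outputs for three classes

hard = softmax(logits, temperature=1.0)    # nearly one-hot
soft = softmax(logits, temperature=20.0)   # spreads mass across classes

print("T = 1 :", [round(p, 3) for p in hard])
print("T = 20:", [round(p, 3) for p in soft])
```

The class ranking is unchanged at either temperature; only the sharpness of the distribution differs, and it is these softened probabilities that serve as training targets in distillation.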

References and Recommended Readings:

- C. Szegedy, W. Zaremba, I. Sutskever, J. Bruna, D. Erhan, I. Goodfellow, and R. Fergus. “Intriguing Properties of Neural Networks”. https://arxiv.org/pdf/1312.6199v4.pdf

- Florian Tramèr, Fan Zhang, Ari Juels, Michael K. Reiter, and Thomas Ristenpart. “Stealing Machine Learning Models via Prediction APIs”. USENIX Security Symposium 2016.

- Reuters. “Microsoft's AI Twitter bot goes dark after racist, sexist tweets”. 24 March 2016. http://www.reuters.com/article/us-microsoft-twitter-bot-idUSKCN0WQ2LA

- Giuseppe Ateniese, Luigi V. Mancini, Angelo Spognardi, Antonio Villani, Domenico Vitali, and Giovanni Felici. “Hacking smart machines with smarter ones: How to extract meaningful data from machine learning classifiers”. International Journal of Security and Networks (IJSN), 10(3), 2015.

- Nicolas Papernot, Patrick McDaniel, Ian Goodfellow, Somesh Jha, Z. Berkay Celik, and Ananthram Swami. “Practical Black-Box Attacks against Machine Learning”. ACM Asia Conference on Computer and Communications Security (ASIACCS), April 2017.

- Ian J. Goodfellow, Jonathon Shlens, and Christian Szegedy. “Explaining and Harnessing Adversarial Examples”. International Conference on Learning Representations (ICLR) 2015.

- Nicolas Papernot, Patrick McDaniel, Somesh Jha, Matt Fredrikson, Z. Berkay Celik, and Ananthram Swami. “The Limitations of Deep Learning in Adversarial Settings”. IEEE European Symposium on Security and Privacy (Euro S&P) 2016.

- Seyed-Mohsen Moosavi-Dezfooli, Alhussein Fawzi, Pascal Frossard. “DeepFool: A Simple and Accurate Method to Fool Deep Neural Networks”. Conference on Computer Vision and Pattern Recognition (CVPR) 2016.

- Ian J. Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. “Generative Adversarial Networks”. https://arxiv.org/pdf/1406.2661.pdf

- Nicolas Papernot, Patrick McDaniel, Xi Wu, Somesh Jha, and Ananthram Swami. “Distillation as a Defense to Adversarial Perturbations Against Deep Neural Networks”. IEEE Symposium on Security and Privacy (SP) 2016.

- Takeru Miyato, Andrew M. Dai, and Ian Goodfellow. “Adversarial Training Methods for Semi-Supervised Text Classification”. International Conference on Learning Representations (ICLR) 2017.

- Erica Klarreich. “Learning Securely”. Communications of the ACM (CACM), 59(11), 12-14, November 2016.

- Bin Liang, Hongcheng Li, Miaoqiang Su, Pan Bian, Xirong Li, and Wenchang Shi. “Deep Text Classification Can be Fooled”. arxiv: https://arxiv.org/abs/1704.08006

About the Instructor:

Dr. Atul Kumar is a senior researcher at IBM Research, India. His primary research interests are in the areas of adversarial machine learning, data engineering, distributed systems, software engineering and Internet technologies. Atul received his Master's and Ph.D. degrees in Computer Science and Engineering from IIT Kanpur in 1998 and 2003 respectively. He has over 15 years of experience working in leading research organizations that include IBM Research, Siemens Corporate Technology, Microsoft R&D, Accenture Technology Labs, and ABB Corporate Research. He has published over 30 papers in leading Computer Science and Automation systems journals and conferences. He is a senior member of both IEEE and ACM.

Atul co-conducted a tutorial on “Process Mining Software Repositories” at COMAD 2014. He also co-conducted a tutorial on “Software Process Intelligence” at ISEC 2015. Atul is currently the steering committee co-chair for the ISEC conference series of ACM India SIGSOFT. He is co-organizing the SEM4HPC workshop at HPDC 2017. In the past, he has co-organized the SEM4HPC workshop at HPDC 2016, the MoSEMInA workshop at ICSE 2014, the Industry Session at ICDCIT 2011 and the Workshop on SaaS & Cloud Computing at ISEC 2008.

Atul has been part of the organizing committees of several academic conferences. He was Industry Track co-chair for ISEC 2017, Workshop Chair for ISEC 2016, Tutorial Chair for ICIIS 2014, PC co-chair (Communication technologies) for HIS 2005, and co-Chair (System) for ACM ICPC, Asia Region (Kanpur), for the years 1999, 2000, 2001 and 2003.